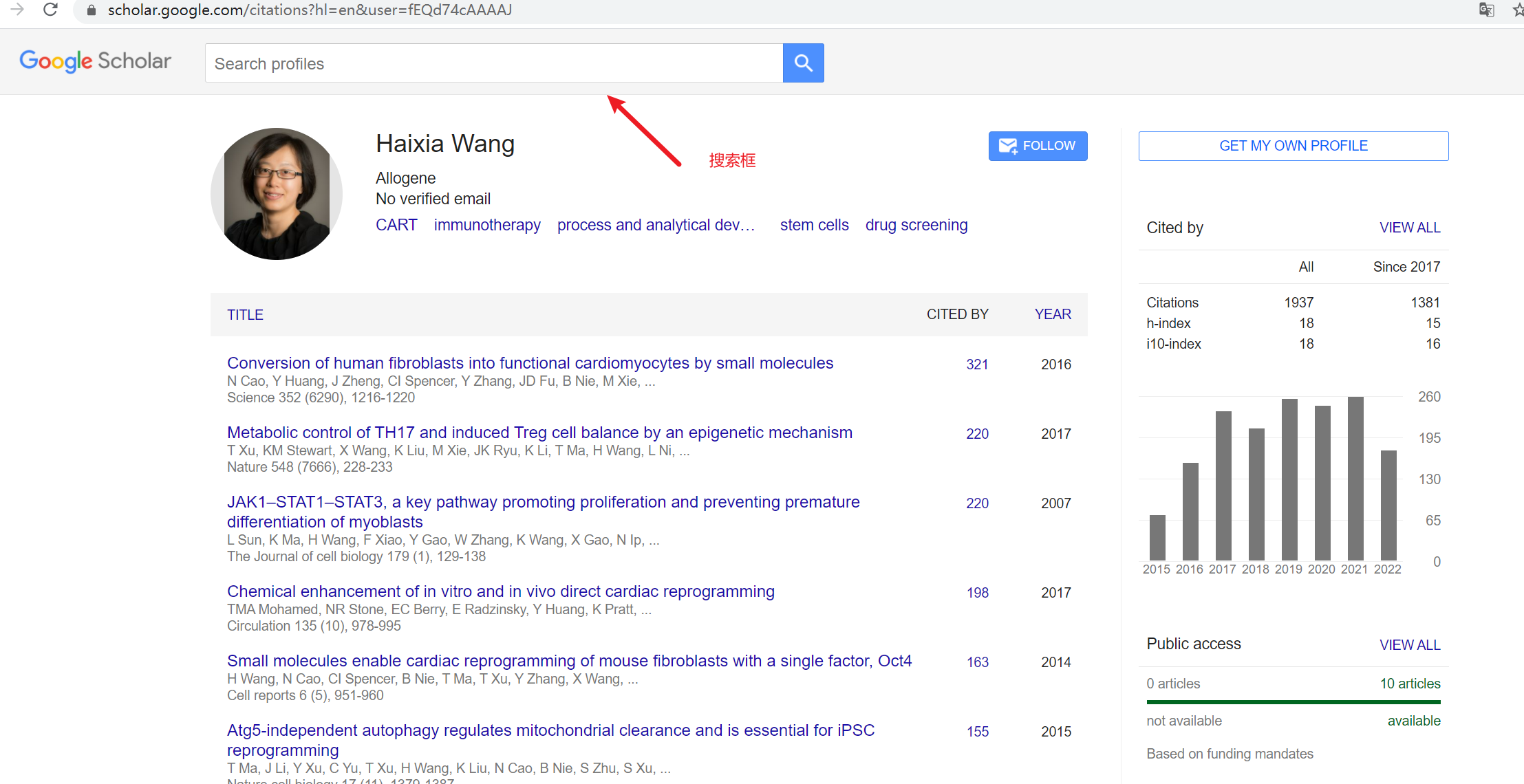

今天接到任务,给我几个人名采集他们在谷歌学术上自己的论文数据,字段包括论文标题,作者,引用数,期刊会议,发表时间等,话不多说,直接用代码实现,进入谷歌学术官网(需要翻墙实现),找到人名搜索框,如图所示

如图所示搜索框,改页面已包含全部所需数据,话不多说,直接上代码

import requests

from lxml import etree

import re

import pandas as pd

headers = {

'cookie': 'SID=PAhWmAWWikPJThzwR_jS546ISU2OAHRgtF1eqr3bQUDrDXZ4Vdo1GuEy0BKjK5RMhQS1qA.; __Secure-1PSID=PAhWmAWWikPJThzwR_jS546ISU2OAHRgtF1eqr3bQUDrDXZ4zV3PeW3Yz3khOurIC6nUjQ.; __Secure-3PSID=PAhWmAWWikPJThzwR_jS546ISU2OAHRgtF1eqr3bQUDrDXZ4Eid3rdD8Ai8Y--DYmHd2hQ.; HSID=AetAl0I0TdVuXmlVZ; SSID=A8W392Q1DtZiazS8t; APISID=FdZiBbZcgOXiV2oN/AfDGCpf4dEr_kj1K1; SAPISID=ildLYr2jtyApljoJ/AWHimn7B7G7CFZqY8; __Secure-1PAPISID=ildLYr2jtyApljoJ/AWHimn7B7G7CFZqY8; __Secure-3PAPISID=ildLYr2jtyApljoJ/AWHimn7B7G7CFZqY8; GSP=LM=1665983795:S=SL7wTz1dWfrquo4e; AEC=AakniGMVK4oL-uh9oIT07sltVkUJO6UjGyN8wLdgxGwUVfMgeb56M4nFiWM; NID=511=vLSYLrwXqWM9a5DpVG9o0Jk0LfRUecL3btSKDKjz0WGx8ZqAZeZJShDRDJwXZt_dL7_R5a8VlYKnnLLBH-uzPXUgISKmpr-9FqTuofqecUu1oaPMwfnhZHuz2KM5QRSs40w8RLnBypyLN6YyOXt2CIm5w86EbTL7WxfC5NrcHBLQiRyfb6iuT3ojUvTY_oETy9ZAFUfXpbCf0K4Q2LqcrW201V5o3WpI02PuvmJm-Wb1RAnbex0OaIQ0X26TpNrnl6rtCJebTpmE; SEARCH_SAMESITE=CgQI3ZYB; GOOGLE_ABUSE_EXEMPTION=ID=170e9c4c5037e2c5:TM=1666936190:C=>:IP=154.53.61.129-:S=hS92BPLMiJ7sm5vAJL6O3Q; 1P_JAR=2022-10-28-05; SIDCC=AIKkIs0OTfgMnAs-R5ovnaapRNsBn0Z0gTCe4hhWlK3hIOTA1kq6aSOP0nO09GxmOVzg5K3htEE; __Secure-1PSIDCC=AIKkIs2OObSKjFOvQ6sKIAQ_jmm_MAwiqaakua63Bmg91Af45lxZIZeXjfPnGo2mcEBXDPvzdw; __Secure-3PSIDCC=AIKkIs2eEPMxtvaZRt5CfdxM-0MCMTO5yXKnAQY5Y8HF2voo9856exF4PsTVgaRPllRNUeyDRA',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.114 Safari/537.36',

}

proxies = {

'http':'127.0.0.1:10809',

'https':'127.0.0.1:10809'

}

real_user_id = []

ids = []

def get_id():

api = 'https://scholar.google.com/citations?view_op=search_authors&hl=en&mauthors=Haixia+Wang&btnG='

r = requests.get(api, proxies=proxies, headers=headers)

html = etree.HTML(r.text)

users_links = html.xpath('//div[@id="gsc_sa_ccl"]/div[@class="gsc_1usr"]/div/a/@href')

contents = [ii.text for ii in html.xpath('//div[@id="gsc_sa_ccl"]/div[@class="gsc_1usr"]//div[@class="gs_ai_aff"]')]

print(contents)

if len(users_links) == len(contents):

for use, co in zip(users_links, contents):

if co:

if 'Zhejiang' in co and 'Technology' in co:

real_user_id.append(re.findall('.*user=(.*)', use)[0])

print(real_user_id)

def get_msg(id):

titless, update_timess, yinyong_numss, authorss, qikanss = [], [], [], [], []

for pg in [0,100,200]:

url = f'https://scholar.google.com/citations?hl=en&user={id}&cstart={pg}&pagesize=100'

data = {

'json': '1'

}

r = requests.post(url,headers=headers,data=data,proxies=proxies)

results = r.json().get('B')

if results:

html = etree.HTML(results)

qikans = [ii.text for ii in html.xpath('//td[@class="gsc_a_t"]/div[2]')]

titles = [ii.text for ii in html.xpath('//a[@class="gsc_a_at"]')]

yinyong_nums = [ii.text for ii in html.xpath('//a[@class="gsc_a_ac gs_ibl"]')]

update_times = [ii.text for ii in html.xpath('//td[@class="gsc_a_y"]/span')]

authors = [ii.text for ii in html.xpath('//td[@class="gsc_a_t"]/div[1]')]

qikanss += qikans

titless += titles

yinyong_numss += yinyong_nums

update_timess += update_times

authorss += authors

print(authors)

print(len(authors))

print(qikans)

print(len(qikans))

print(titles)

print(len(titles))

print(yinyong_nums)

print(len(yinyong_nums))

print(update_times)

print(len(update_times))

detail_links = ['https://scholar.google.com'+ i for i in html.xpath('//td[@class="gsc_a_t"]/a/@href')]

print(detail_links)

print(len(detail_links))

df = pd.DataFrame({

'标题':titless,

'年份':update_timess,

'引用次数':yinyong_numss,

'作者':authorss,

'期刊/会议':qikanss

})

df.to_csv('导师谷歌学术.csv',index=False,encoding='utf_8_sig',mode='a',header=False)

if __name__ == '__main__':

ky = ['ronghua liang']

get_msg(ids[3])

以上代码为思路,不可直接运行,纯属记录我的工作思路

如果上述代码帮助您很多,可以打赏下以减少服务器的开支吗,万分感谢!

赣公网安备 36092402000079号

赣公网安备 36092402000079号

点击此处登录后即可评论